RADAR: Robust AI-Text Detection via Adversarial Learning

Xiaomeng Hu (CUHK), Pin-Yu Chen (IBM), Tsung-Yi Ho (CUHK)

Demo by: Hendrik Strobelt (IBM, MIT), Ben Hoover (IBM, GATech)

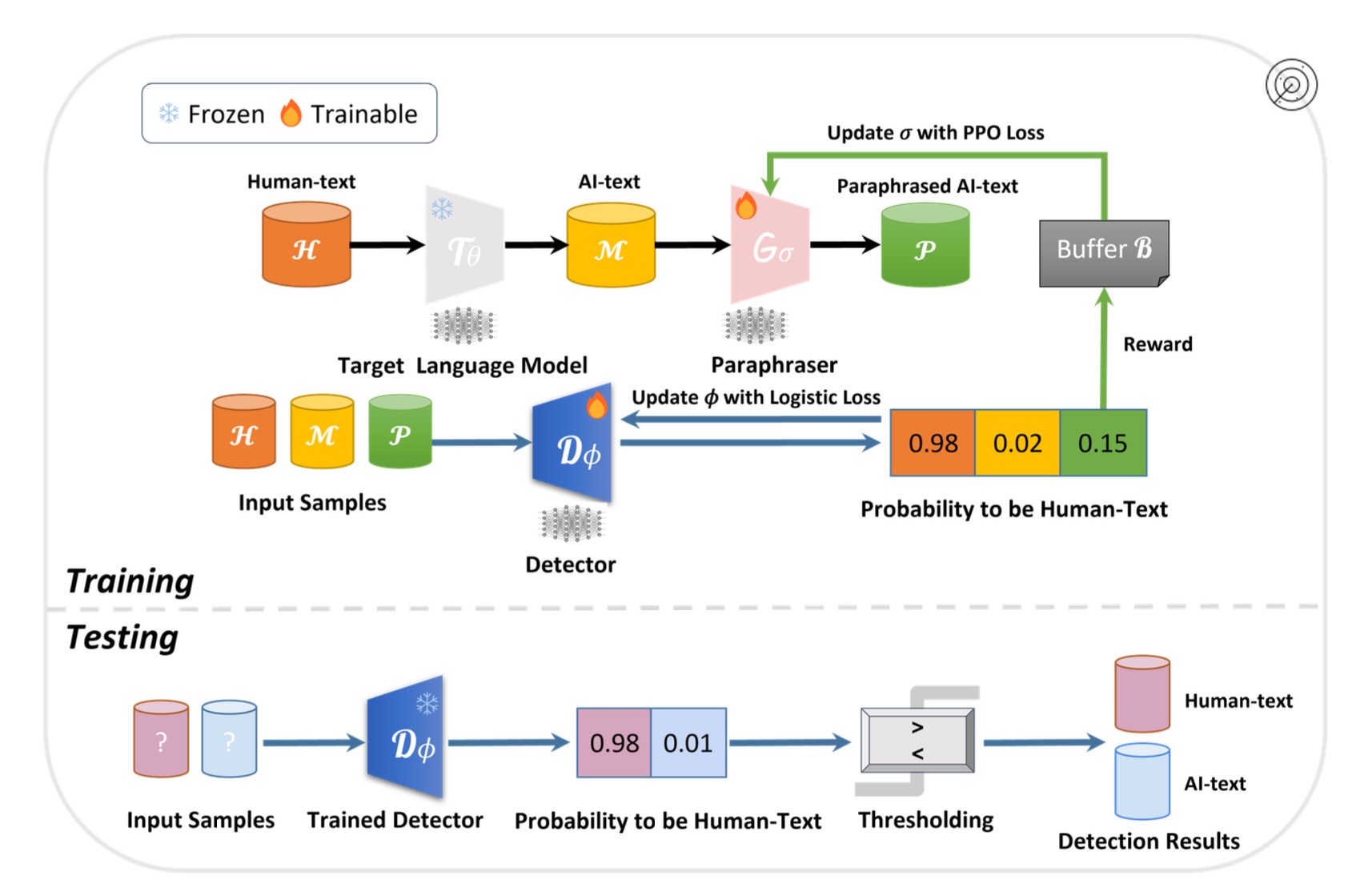

Recent advances in large language models (LLMs) and the intensifying popularity of ChatGPT-like applications have blurred the boundary of high-quality text generation between humans and machines. However, in addition to the anticipated revolutionary changes to our technology and society, the difficulty of distinguishing LLM-generated texts (AI-text) from human-generated texts poses new challenges of misuse and fairness, such as fake content generation, plagiarism, and false accusations against innocent writers. Existing work shows that current AI-text detectors are not robust to LLM-based paraphrasing. This paper aims to bridge this gap by proposing a new framework called RADAR, which jointly trains a robust AI-text detector via adversarial learning. RADAR is based on adversarial training of a paraphraser and a detector. The paraphraser's goal is to generate realistic content that evades AI-text detection. RADAR uses the feedback from the detector to update the paraphraser, and vice versa. Evaluated with 8 different LLMs (Pythia, Dolly 2.0, Palmyra, Camel, GPT-J, Dolly 1.0, LLaMA, and Vicuna) across 4 datasets, experimental results show that RADAR significantly outperforms existing AI-text detection methods, especially when paraphrasing is in place. We also identify the strong transferability of RADAR from instruction-tuned LLMs to other LLMs, and evaluate the improved capability of RADAR via GPT-3.5.
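To make the alternating update concrete, here is a minimal toy sketch in Python. It is not the RADAR implementation: texts are replaced by a hypothetical one-dimensional feature, the detector is a logistic regression, the paraphraser is a single learnable shift `delta`, and plain gradient steps stand in for whatever optimization the paper actually uses. The names `train_toy_radar`, `max_shift`, and the sample values are all assumptions made for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mean(values) -> float:
    values = list(values)
    return sum(values) / len(values)


def train_toy_radar(rounds=200, lr_detector=0.5, lr_paraphraser=1.0, max_shift=0.5):
    """Alternate paraphraser and detector updates on toy 1-D data (illustrative only)."""
    # Hypothetical scalar "text features": human samples near 0, AI samples near 2.
    human = [-0.5, 0.0, 0.5]
    ai = [1.5, 2.0, 2.5]

    w, b = 0.0, 0.0  # detector parameters: P(AI | x) = sigmoid(w * x + b)
    delta = 0.0      # paraphraser parameter: paraphrase(x) = x + delta

    def detect(x: float) -> float:
        return sigmoid(w * x + b)

    for _ in range(rounds):
        # Paraphraser step: use the detector's feedback to lower the AI score
        # of paraphrased samples (gradient of mean detect(x + delta) w.r.t. delta).
        paraphrased = [x + delta for x in ai]
        grad_delta = mean(detect(x) * (1.0 - detect(x)) * w for x in paraphrased)
        delta -= lr_paraphraser * grad_delta
        # Assumption: clip the shift as a stand-in for "the paraphrase must keep the meaning".
        delta = max(-max_shift, min(0.0, delta))

        # Detector step: logistic loss with human labeled 0, and both original
        # and paraphrased AI samples labeled 1 (the two AI groups share weight 0.5 each).
        paraphrased = [x + delta for x in ai]
        grad_w = mean(detect(x) * x for x in human) + 0.5 * (
            mean((detect(x) - 1.0) * x for x in ai)
            + mean((detect(x) - 1.0) * x for x in paraphrased)
        )
        grad_b = mean(detect(x) for x in human) + 0.5 * (
            mean(detect(x) - 1.0 for x in ai)
            + mean(detect(x) - 1.0 for x in paraphrased)
        )
        w -= lr_detector * grad_w
        b -= lr_detector * grad_b

    return detect, delta


if __name__ == "__main__":
    detect, delta = train_toy_radar()
    print("learned paraphraser shift:", delta)
    print("P(AI) for a human-like sample:", detect(0.0))
    print("P(AI) for a paraphrased AI sample:", detect(2.0 + delta))
```

The point of the sketch is only the loop structure: the paraphraser moves toward whatever the current detector scores as human-like, and the detector is then retrained on the paraphrased samples as well as the originals, so each side is updated with feedback from the other.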

Citation

@article{hu2023radar,
  title={RADAR: Robust AI-Text Detection via Adversarial Learning},
  author={Xiaomeng Hu and Pin-Yu Chen and Tsung-Yi Ho},
  year={2023},
  journal={Advances in Neural Information Processing Systems},
}